Introduction

I have a project on GitHub that analyzes videos and/or photos for people-shaped objects. When it finishes, it can send a text alert and/or an email with the results of what it found. This is very useful, and I use it every day to analyze my exterior home surveillance footage from the previous night. I’ve recently added the ability to run that script on a GPU using Tensorflow 2.2 and OpenCV 4.3.

As you may know, Tensorflow can run on CUDA-capable GPUs and sees performance improvements when doing so. My project relies on the deep neural network module provided by OpenCV (cv2.dnn), and when I started this project cv2.dnn did not support CUDA. Well, now it does!
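For context, here is roughly what "cv2.dnn supports CUDA" means in code. This is a minimal sketch, not the code from people-detect.py: it assumes OpenCV 4.2 or later built with CUDA, and load_net() is a hypothetical helper whose model paths are placeholders. The setPreferableBackend and setPreferableTarget calls are the part that moves inference onto the GPU.

```python
# Sketch: asking an OpenCV DNN network to run on the GPU.
# Assumes OpenCV >= 4.2 built with CUDA; load_net() is a hypothetical helper.
try:
    import cv2
except ImportError:  # OpenCV is not installed in this interpreter
    cv2 = None


def load_net(weights_path, config_path, use_gpu=False):
    """Load a network with cv2.dnn and optionally route it to the CUDA backend."""
    if cv2 is None:
        raise RuntimeError("OpenCV (cv2) is not installed")
    net = cv2.dnn.readNet(weights_path, config_path)
    if use_gpu:
        # Ask cv2.dnn to run this network with its CUDA backend on the GPU.
        net.setPreferableBackend(cv2.dnn.DNN_BACKEND_CUDA)
        net.setPreferableTarget(cv2.dnn.DNN_TARGET_CUDA)
    return net
```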

If you’re willing to go down a rabbit hole with me, I’ll walk you through setting up your machine to run people-detect.py on your Nvidia graphics card.

Disclaimer: Before getting started…

people-detect.py works fine just the way it is. The below process may be frustrating and may break things that are already working on your system. I’m not an expert in any of this and can only walk you through how I set up my own machine.

Getting Started

This tutorial makes several assumptions:

- You are running Linux. This may work on Windows, but I have no experience with that.

- You have a CUDA-capable graphics card.

- You’re comfortable using the terminal.

- You have admin privileges on the machine you’ll be working on.

Game Plan

We’ll be carrying out the following tasks. In each section I’ll do my best to link back to the resources that helped me along the way.

- Create a Python 3.7 virtual environment (easy)

- Clone the People Detector repo (easy)

- Set up our virtual environment (easy)

- Install Nvidia CUDA and cuDNN drivers (medium)

- Download and compile OpenCV from source (hard)

- Link our compiled OpenCV to our Python virtual environment (easy)

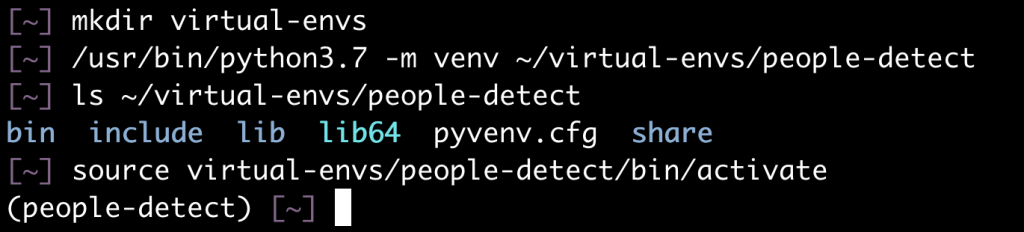

Create a Python 3.7 Virtual Environment and activate it

At the time of this writing, Python 3.7 is not the most current release. However, it is the latest release that Tensorflow supports. This will change in the future and I’ll update this post accordingly.

If Python 3.7 is not already on your system, you’ll need to install it with:

sudo apt install python3.7

or your system’s package manager equivalent. From there, we need to create a directory for our virtual environment to live in:

mkdir ~/virtual-envs

Now we can create our virtual environment:

/usr/bin/python3.7 -m venv ~/virtual-envs/people-detect

You should now have a folder in your ~/virtual-envs/ directory called people-detect. The last step is to activate your virtual environment. This ensures that anything involving Python happens inside the virtual environment we just created rather than across your entire system:

source ~/virtual-envs/people-detect/bin/activate
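If you want to double-check that activation worked, a small script like this one (run with python from the same terminal) reports whether a virtual environment is active and which Python version it uses. It relies only on the standard library; the file name check_env.py is just a suggestion.

```python
import sys


def in_virtualenv():
    """True when running inside a venv created with `python -m venv`."""
    # venv points sys.prefix at the environment, while sys.base_prefix
    # keeps pointing at the interpreter the environment was created from.
    return sys.prefix != getattr(sys, "base_prefix", sys.prefix)


def python_version():
    """Return the running interpreter's major.minor version, e.g. '3.7'."""
    return "{}.{}".format(sys.version_info[0], sys.version_info[1])


if __name__ == "__main__":
    print("virtual environment active:", in_virtualenv())
    print("python version:", python_version())  # 3.7 for this tutorial
```

Usage: python check_env.py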

Clone the People Detector repo

From your home directory:

git clone https://github.com/humandecoded/People-Detector.git

Set up our virtual environment

You should still see (people-detect) in your terminal. This is how you know you’re in your virtual environment.

First, we need to update pip:

pip install --upgrade pip

Next, we need to install Tensorflow and cvlib:

pip install tensorflow

pip install cvlib

Finally, we’ll add twilio support to our virtual environment in case we want text message alerts later:

pip install twilio
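To confirm the three packages landed in the virtual environment rather than system-wide, you can ask Python whether it can locate each module without importing it. This sketch uses importlib.util.find_spec from the standard library; tensorflow, cvlib and twilio are the import names of the packages installed above.

```python
import importlib.util

# Import names of the packages installed above with pip.
REQUIRED = ["tensorflow", "cvlib", "twilio"]


def missing_modules(names):
    """Return the module names that cannot be found on sys.path."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(REQUIRED)
    print("missing:", missing or "none")
```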

For the time being, our virtual environment is all set up. We’ll step out of it for now, because we want to be in our “regular” Python environment for the following steps. To exit your virtual environment:

deactivate

Install Nvidia CUDA and cuDNN drivers

Tensorflow is very particular about which versions of CUDA it will work with. I highly recommend following the instructions on the Tensorflow GPU support page to make this as painless as possible, specifically the “Install CUDA with apt” section. It is the least painful of all the options and could save you hours of troubleshooting.

The most up-to-date CUDA version from Nvidia is NOT the one that works with Tensorflow. If you already have CUDA installed, you may need to downgrade. After you finish the Tensorflow GPU instructions, you’ll most likely need to restart your machine.
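After the restart, one way to check that Tensorflow can actually see the card is to activate the virtual environment again and ask it to list GPU devices. This is a sketch assuming Tensorflow 2.1 or later, where tf.config.list_physical_devices exists; an empty list usually means Tensorflow could not load the CUDA libraries. The nvidia-smi lookup only tells you whether Nvidia’s tool is on your PATH.

```python
import shutil


def tensorflow_gpus():
    """Return the GPU devices TensorFlow can see, or [] if TensorFlow is missing."""
    try:
        import tensorflow as tf
    except ImportError:
        return []
    # tf.config.list_physical_devices exists in TensorFlow 2.1 and later.
    return tf.config.list_physical_devices("GPU")


def nvidia_smi_path():
    """Path to the nvidia-smi tool if it is on PATH, else None."""
    return shutil.which("nvidia-smi")


if __name__ == "__main__":
    print("nvidia-smi:", nvidia_smi_path() or "not found")
    print("GPUs visible to TensorFlow:", tensorflow_gpus())
```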

Compile OpenCV from source

This part was the hardest for me and I’ll try to link to all the sources that I found useful in piecing it together. Our plan here will be to:

- Install dependencies

- Clone the OpenCV git repo

- Clone the OpenCV-contrib repo

- Compile OpenCV with a number of optional flags

For installing dependencies, I found the OpenCV documentation site to be the most helpful.

sudo apt-get install build-essential

sudo apt-get install cmake git libgtk2.0-dev pkg-config libavcodec-dev libavformat-dev libswscale-dev

sudo apt-get install python3-dev python3-numpy libtbb2 libtbb-dev libjpeg-dev libpng-dev libtiff-dev libjasper-dev libdc1394-22-dev

git clone https://github.com/opencv/opencv.git

git clone https://github.com/opencv/opencv_contrib.git

Note: I was unable to download libjasper-dev, so you may need to leave it out of your apt-get command for the install to succeed. I was able to proceed without it.
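Before compiling, it can save a failed build to confirm the command-line tools from the packages above are on your PATH. This is a standard-library sketch using shutil.which; the tool list is my assumption of what the build needs (build-essential provides make and g++).

```python
import shutil

# Tools the OpenCV build relies on (assumed list, adjust as needed).
TOOLS = ["git", "cmake", "make", "pkg-config", "g++"]


def missing_tools(tools):
    """Return the tools that are not found on PATH."""
    return [tool for tool in tools if shutil.which(tool) is None]


if __name__ == "__main__":
    print("missing tools:", missing_tools(TOOLS) or "none")
```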

Now we have all the pieces in place to begin compiling our source code.

- We’re going to move our opencv_contrib folder inside our opencv folder.

- We’ll create a build folder inside our opencv folder and move into it.

mv opencv_contrib opencv/

cd opencv

mkdir build

cd build

Make sure you’re NOT in your virtual environment for this step.
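The cmake command in the next step points OPENCV_EXTRA_MODULES_PATH at ../opencv_contrib/modules, a path relative to the build folder you run cmake from. This sketch checks that the folders ended up where that path expects; it assumes the repos were cloned into your home directory, as above.

```python
from pathlib import Path


def contrib_modules_dir(build_dir):
    """Resolve ../opencv_contrib/modules relative to the build folder."""
    return (Path(build_dir) / ".." / "opencv_contrib" / "modules").resolve()


def layout_ok(build_dir):
    """True if the contrib modules folder exists where cmake will look for it."""
    return contrib_modules_dir(build_dir).is_dir()


if __name__ == "__main__":
    build = Path.home() / "opencv" / "build"
    print(contrib_modules_dir(build), "exists:", layout_ok(build))
```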

Now we run cmake with all of our optional flags. Full disclosure: not all of these flags may be necessary, but the end result worked for me. You can copy and paste this entire block into your terminal:

cmake -D CMAKE_BUILD_TYPE=RELEASE \
-D WITH_CUDA=ON \
-D ENABLE_FAST_MATH=ON \
-D CUDA_FAST_MATH=ON \
-D WITH_CUBLAS=ON \
-D WITH_CUDNN=ON \
-D WITH_LIBV4L=ON \
-D WITH_V4L=ON \
-D WITH_GSTREAMER=ON \
-D WITH_GSTREAMER_0_10=OFF \
-D WITH_QT=ON \
-D WITH_OPENGL=ON \
-D OPENCV_DNN_CUDA=ON \
-D BUILD_opencv_python2=OFF \
-D BUILD_opencv_python3=ON \
-D BUILD_TESTS=OFF \
-D BUILD_PERF_TESTS=OFF \
-D OPENCV_ENABLE_NONFREE=ON \
-D OPENCV_EXTRA_MODULES_PATH=../opencv_contrib/modules ..

Scroll up through the results of cmake. You should see where your CUDA driver was found. Some things will not be found, but that’s ok. You should get a message at the end that is error free. Next, we will compile our source code.
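Before compiling, you can optionally confirm that cmake actually picked up CUDA and cuDNN. One way is to re-run the same cmake command with 2>&1 | tee cmake-output.txt appended to its last line (the file name is arbitrary) and let a short script pick out the summary lines. The labels NVIDIA CUDA and cuDNN are taken from the configuration summary OpenCV 4.x prints; treat the exact wording as an assumption and compare it with your own output.

```python
import sys

# Summary labels printed by OpenCV's cmake (assumed wording; compare with your output).
LABELS = ("NVIDIA CUDA", "cuDNN")


def summary_status(text, labels=LABELS):
    """Map each label to True if its summary line says YES, otherwise False."""
    status = {label: False for label in labels}
    for line in text.splitlines():
        # Summary lines start with "--" plus padding, e.g. "--   cuDNN:   YES (...)".
        stripped = line.lstrip("- ").strip()
        for label in labels:
            if stripped.startswith(label + ":"):
                value = stripped[len(label) + 1:].strip()
                status[label] = value.upper().startswith("YES")
    return status


if __name__ == "__main__":
    path = sys.argv[1] if len(sys.argv) > 1 else "cmake-output.txt"
    try:
        with open(path) as f:
            text = f.read()
    except OSError:
        print("could not read", path)
    else:
        for label, found in summary_status(text).items():
            print("{}: {}".format(label, "found" if found else "NOT found"))
```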

make -j6

I used make -j6 because my CPU has 6 cores; adjust the number to match yours. This part will take a while. On my machine it hung at 97% for quite some time before completing. Also worth noting: at the end of the compilation we will NOT be running make install.
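If you’d rather not count cores by hand, Python’s os.cpu_count() (or the nproc command) gives a starting value. It reports logical CPUs, which can be twice the physical core count on CPUs with hyper-threading. The reserve parameter of this hypothetical make_jobs() helper lets you keep a few free so the machine stays usable during the build.

```python
import os


def make_jobs(reserve=0):
    """Suggest a -j value for make: logical CPUs minus any you want to keep free."""
    cpus = os.cpu_count() or 1  # cpu_count() can return None
    return max(1, cpus - reserve)


if __name__ == "__main__":
    print("make -j{}".format(make_jobs()))
```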


Link the OpenCV build to our Python Virtual Environment

The last step will be to place a link in our virtual environment folder back to our OpenCV build. We’ll navigate to our virtual environment directory to make this easier.
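Before creating the link, it may be worth checking which cv2 file the build produced and whether its version tag matches the Python in the virtual environment. This sketch compares the file name against sysconfig’s EXT_SUFFIX (for example .cpython-37m-x86_64-linux-gnu.so on Python 3.7); run it with the virtual environment’s python. The path assumes OpenCV was cloned into your home directory.

```python
import sysconfig
from pathlib import Path


def find_cv2_binding(build_lib):
    """Return the compiled cv2 extension files in OpenCV's build/lib/python3."""
    return sorted(Path(build_lib).glob("cv2*.so"))


def matches_interpreter(so_path):
    """True if the file's version tag matches the running interpreter."""
    suffix = sysconfig.get_config_var("EXT_SUFFIX")
    return suffix is not None and Path(so_path).name.endswith(suffix)


if __name__ == "__main__":
    lib = Path.home() / "opencv" / "build" / "lib" / "python3"
    for so in find_cv2_binding(lib):
        print(so.name, "matches this Python:", matches_interpreter(so))
```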

cd ~/virtual-envs/people-detect/lib/python3.7/site-packages

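ln -s ~/opencv/build/lib/python3/cv2.cpython-36m-x86_64-linux-gnu.so cv2.so

The exact file name depends on the Python version cmake built the bindings for; on my machine it was cpython-36m. Use the name of the file that is actually in ~/opencv/build/lib/python3/. Ideally its version tag matches the Python in your virtual environment (cpython-37m for Python 3.7), since compiled extension modules are built for a specific Python version.

Summary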

Your Python virtual environment should now be able to import cv2 and use the version we compiled with CUDA support. You can now run people-detect.py with the --gpu flag and should see a noticeable increase in the speed of your analysis.

Example:

source ~/virtual-envs/people-detect/bin/activate

python ~/People-Detector/people-detect.py -d <path to folder> --gpu
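To confirm that the virtual environment is using the CUDA-enabled build rather than some other copy of OpenCV, a short check like this one prints where cv2 was loaded from and how many CUDA devices OpenCV reports. cv2.cuda.getCudaEnabledDeviceCount() returns 0 when OpenCV was built without CUDA and -1 when the CUDA driver is missing or incompatible, so a positive count is a good sign. This is a sketch assuming the setup above.

```python
try:
    import cv2
except ImportError:  # OpenCV is not importable from this interpreter
    cv2 = None


def cuda_device_count():
    """CUDA devices OpenCV reports: 0 without CUDA support, -1 if the driver is missing or incompatible."""
    if cv2 is None or not hasattr(cv2, "cuda"):
        return 0
    return cv2.cuda.getCudaEnabledDeviceCount()


if __name__ == "__main__":
    if cv2 is None:
        print("cv2 is not importable in this environment")
    else:
        print("cv2 loaded from:", cv2.__file__)  # should be inside ~/virtual-envs/people-detect
        print("OpenCV version:", cv2.__version__)
    print("CUDA devices visible to OpenCV:", cuda_device_count())
```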