Today we'll have a look at "Linkook". Linkook is a tool that not only checks whether usernames and emails exist across different sites but can also connect related accounts that use different usernames. Let's dive in and see what we can learn.

tl;dr What did I learn?

When you query a URL for a given username, you may be getting back way more useful data than you realize. Some sites include additional handles or emails associated with that account. By taking full advantage of the information being returned to you, it's possible to uncover accounts or usernames with a different naming convention than the search string you started with.

Tool Details

- Author: JackJuly

- Tool: https://github.com/JackJuly/linkook

- Language: Python

- Requirements: Requests, Colorama

Questions

- How is the tool checking for emails and usernames on sites?

- How is the tool connecting linked accounts together across platforms?

From previous posts, I've outlined my methodology as "Read their docs" -> "Understand dependencies" -> "Look for calls to external sites" -> "Ask AI".

Documentation

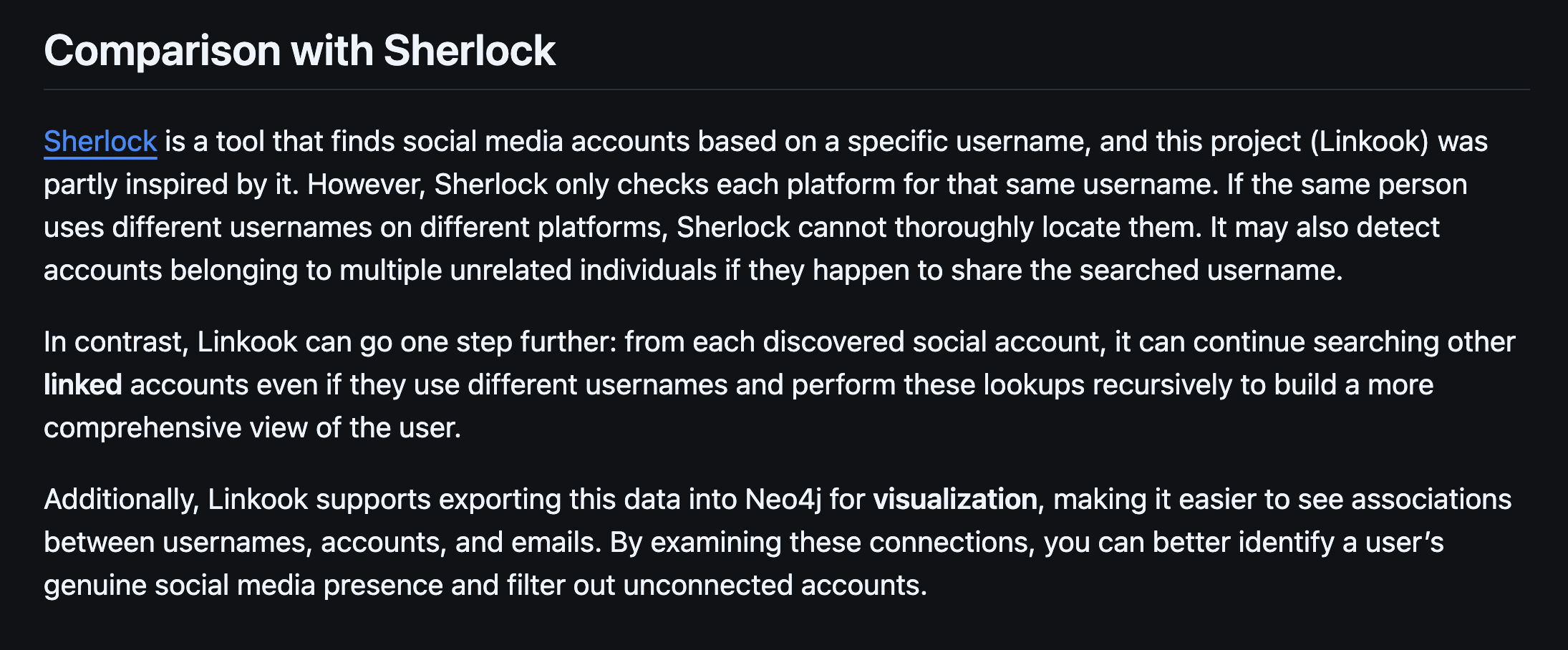

The README is well written and very concise. The tool itself seems straightforward to use and has some useful options as well. While the README doesn't give us any insight into our questions above, it does give us insight into the tool itself.

We also learn that a user can force the use of a local file of sites to check, which implies that the default is to use a remotely maintained list.

I'm specifically curious how it achieves "...searching other linked accounts even if they use different usernames and perform these lookups recursively..."

Understand Dependencies

I was a little surprised to see so few dependencies: colorama for displaying text in the terminal and requests for reaching out to other sites. The lack of something like beautifulsoup4 or selenium tells me there may not be web scraping the way I'm used to it. Instead, the tool appears to rely on the responses and data received via requests.

Look for calls to external sites

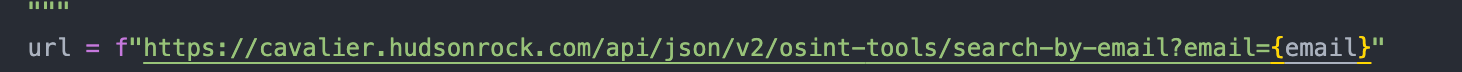

Since the tool uses requests and is meant to check for the existence of users on those sites, we can expect to see calls out to sites like github.com. We also know from the docs that it will reach out to Hudson Rock, but we can still check for any other interesting API calls.

It is interesting to learn that Hudson Rock has a public-facing API that checks for breaches and, from the look of it, requires no API key.

Question 1: How is the tool checking sites for the existence of usernames or emails?

Using providers.json, the tool knows "what to expect" for each site. Based on the regex structure, it knows "how to ask" the site for the given username. Based on the status code and contents returned, it decides whether the user exists on that platform.

For example:

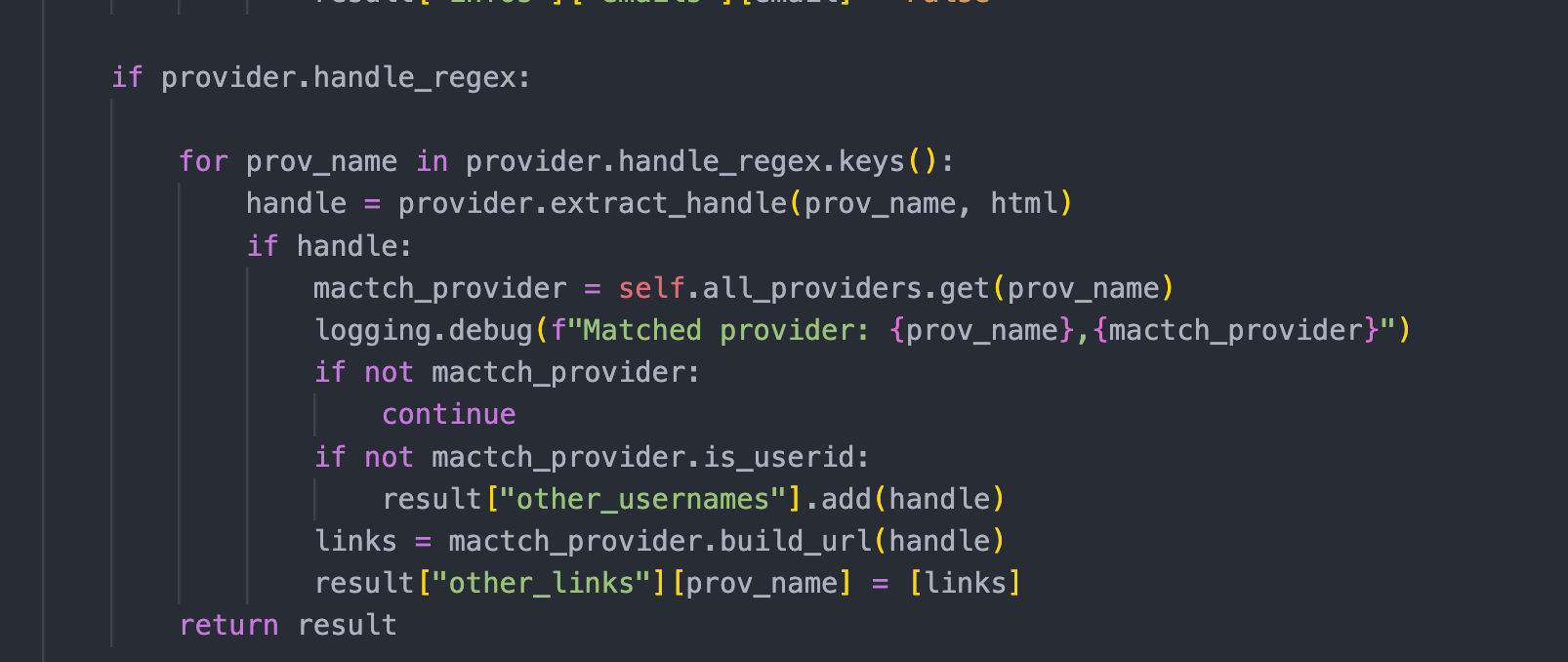
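To make the idea concrete, here is a minimal sketch of that kind of check. It is not Linkook's code: the provider entry, its field names (`profile_url`, `not_found_markers`), and both helper functions are hypothetical and only illustrate "how to ask" and "what to expect".

```python
from urllib.parse import quote

# Hypothetical provider entry. The field names are illustrative and
# are NOT copied from Linkook's providers.json.
EXAMPLE_PROVIDER = {
    "name": "ExampleSite",
    "profile_url": "https://example.com/users/{}",
    "not_found_markers": ["User not found", "Page not found"],
}


def build_profile_url(provider, username):
    """'How to ask': fill the username into the site's URL template."""
    return provider["profile_url"].format(quote(username, safe=""))


def account_exists(provider, status_code, body):
    """'What to expect': decide from the status code and the page contents."""
    if status_code != 200:
        return False
    # Some sites answer 200 even for missing users, so also look for
    # known "not found" text in the body.
    return not any(marker in body for marker in provider["not_found_markers"])
```

With requests, the two pieces would be tied together by fetching `build_profile_url(...)` with `requests.get(url, timeout=10)` and passing `resp.status_code` and `resp.text` to `account_exists`.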

Question 2: How is the tool connecting different accounts together across sites?

While it's checking the first "layer" of existence on each site in providers.json, it is also looking for other things on the page that could be other account names. If it finds any, it performs the exact same check on those usernames, which may in turn reveal yet more usernames, and so on. The script completes once the recursion has finished.
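The "check, find more names, check again" loop can be sketched roughly as follows. This is my own simplified version, not the tool's implementation: `discover_usernames`, the `find_linked` callback, and the `max_depth` cap are all assumptions, and I've used a queue instead of literal recursion to keep it short.

```python
from collections import deque


def discover_usernames(start, find_linked, max_depth=3):
    """Follow linked usernames outward from `start`.

    `find_linked` is a hypothetical callback that takes a username and
    returns the usernames found on that user's profile pages.
    """
    seen = {start}                 # never check the same name twice
    queue = deque([(start, 0)])    # (username, depth at which it was found)
    while queue:
        username, depth = queue.popleft()
        if depth >= max_depth:     # stop expanding once the cap is reached
            continue
        for linked in find_linked(username):
            if linked not in seen:
                seen.add(linked)
                queue.append((linked, depth + 1))
    return seen
```

The `seen` set matters more than it looks: profiles often link back to each other, and without it the loop would never finish.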

But how?

The real magic happens in the search_in_reponse function. Based on the attributes defined in providers.json, this function checks through the results of the requests call, looking for other emails, usernames, or links to other sites. For example, the HackerOne definition contains guidance on how to search for other handles on the page.
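As a rough illustration of that kind of search, here is a sketch that pulls handles and emails out of a response body with regular expressions. The patterns, the `HANDLE_PATTERNS` mapping, and the `extract_leads` function are hypothetical stand-ins I wrote for this post; the real patterns live in providers.json and will differ.

```python
import re

# Deliberately simple, illustrative patterns (assumptions, not Linkook's).
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")

HANDLE_PATTERNS = {
    "github": re.compile(r"github\.com/([A-Za-z0-9-]+)"),
    "twitter": re.compile(r"twitter\.com/([A-Za-z0-9_]{1,15})"),
}


def extract_leads(body):
    """Return handles (grouped by site) and emails found in a response body."""
    handles = {}
    for site, pattern in HANDLE_PATTERNS.items():
        found = sorted(set(pattern.findall(body)))  # de-duplicate matches
        if found:
            handles[site] = found
    emails = sorted(set(EMAIL_RE.findall(body)))
    return {"handles": handles, "emails": emails}
```

Each handle that comes back is a new search string, which is what feeds the next round of lookups.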

So by taking full advantage of the data being sent back from the requests call and using the magic of recursion, this tool gets far more out of what used to be just a simple yes/no check.

Awesome!